Our partners, whether they are schools, local education agencies (LEAs), or foundations, want to spend less time on data organization, and more time on improving and understanding student outcomes. When we work with foundations and other non-profits in particular, we often find they may not have access to student-level data (especially personally identifiable information, or PII) or they are unable to share these data with external stakeholders. As a result, we’ve identified a substantial need in the field for analytics rooted in publicly available data.

In order to use publicly available data across a broad range of geographic locations and data types, we needed a cross-team solution. As a non-profit with technical expertise, EA often takes on the initial burden of standardizing & warehousing data in order to realize long-term returns on investment for our partners—in other words, providing more informative and actionable data summaries at a lower cost. In this work, we are investing in building a public data warehouse to create a low-cost tool that provides actionable insights into any public K-12 school in the U.S.

In this blog, Dana Robertson will provide the perspective from our Analyst team and Rob Little will provide the perspective from our Data Engineering team in tackling this public data warehouse build. Both teams prioritize data integrity and impact over all else, but divide and conquer when it comes to the analytics.

1. Where we began: Initial success sparked cross-team collaboration

Analyst Perspective

Our team’s priority is to ensure we process data across states and data types (think enrollment, assessment, graduation, absenteeism, etc.) in a way that is flexible, so that we retain as much valuable information as possible. At the same time, we also ensure stringent business rules are applied to allow us to combine data (both within state/across data types and across state/within data types) as seamlessly as possible. Once we got into the nitty gritty of the unique data structure and business rules in each state, it was clear that this is no easy feat.

The three key elements of our initial work in the public data realm included understanding business rules, enacting clear suppression rules, and building data analytics.

Business rules

Business rules that each state applies in either their collection or reporting methods may differ at several different levels, (1) between states, (2) within state/across data types, or even (3) within a singular state and data type but across years. We spend a great deal of time to understand the data to ensure we are manipulating it without creating bias. This requires a deep understanding of each particular file and understanding the goals of the individual who created it.

Suppression

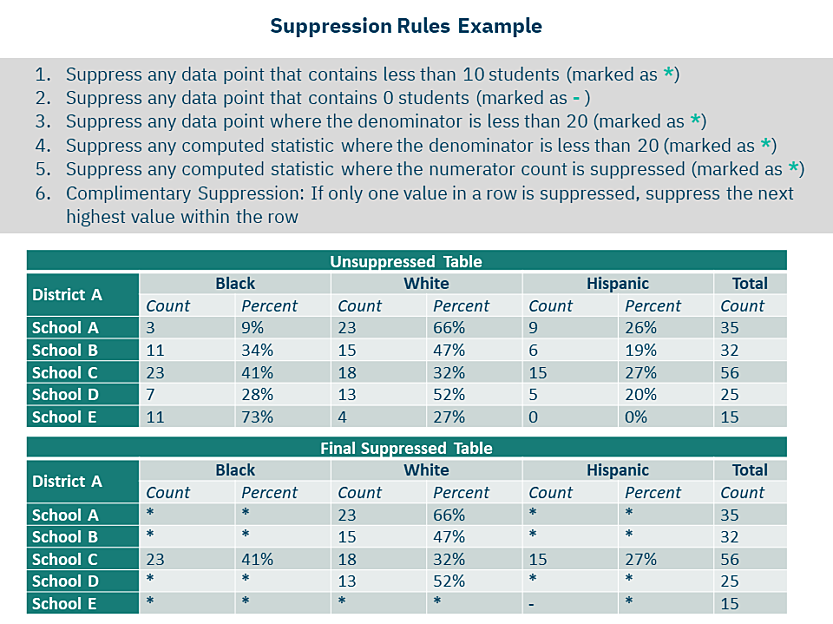

Suppression is an agency’s way of protecting the identity of their students. If a student group being reported on is so small that one could decipher or determine the identity of the underlying students feeding into a given figure, then the state will mask ("suppress") the data. We find it incredibly important to both understand and quantify the suppression we see within each data file. Below is an example of what suppression might look like in one state:

We are able to estimate for our partners the number of students we are unable to capture in our metrics at various levels (depending on the metric) due to suppression. This allows our partners to make an informed decision about how to rely on that metric, such as whether for purely informational or also actionable purposes. We believe that for our partners to fully understand our analytics, it is just as important to understand the limitations that exist.

Building data analytics

After this data processing stage, we take a series of steps to successfully combine our state data with federal sources to gain as much information as we can on school characteristics, location, etc. in each given school year. Then, we are able to build analytics and visuals. Although partners can simply look at the school data directly, EA is able to build standard or custom views on top of these data to suit a partner's needs.

Some standard options include:

- Looking at various traditional school districts and how they are performing relative to one another (or to the state itself)

- Geographically placing charter schools within these traditional school districts to examine performance across different school types

Some partner custom views may include (but certainly are not limited to):

- Tracking a portfolio of investment schools before and after investment, or over time

- Including custom groupings of schools, districts, or states dictated by partner needs for easy comparison

- Incorporating survey data or other custom data into metrics that rely primarily on publicly available data

Below are two views from one such custom dashboard we created for our partners at the School Superintendents Association:

Needing to scale

Our work began with 11 states and four data types, but the demand for this type of processed, consistent, and transparent data—and the resulting analytics—has grown much larger. Our partners wanted to expand the geographic locations and data types we work with, while increasing the school years included. The sheer volume of these data began to strain our existing tools for creating these metrics and storing the data in an efficient manner. This led to our need to bring in the expertise of our engineering team.

Engineering perspective

The engineering team at EA prioritizes building data systems that provide impact, security, and efficiency. These qualities are sometimes in tension with one another and so require thoughtful consideration of tradeoffs for each use case. For example, opening access to data quickly may provide short-term impact, but harm the long-term security and efficiency of the system. We firmly believe that a data warehouse derives value from its stability and extensibility, so our goal when we came into this project was to design for the long-term demands of the system.

Often, it can be difficult to forecast all of the data sources, analytical uses, or technical challenges of the future. In this case, we were lucky to inherit a strong data standard from the Analyst team. Having this standard greatly reduced the effort needed on our team’s part for data ingestion. This is generally why we believe in the adoption of data standards like Ed-Fi. In this case, we did not have the luxury of pulling from a standardized source system, so we leveraged our own data standard, created by the Analyst team.

With the strong foundation provided to us by the analysts, our two main tasks when beginning this work were to weigh our options when it came to technology (what tools are right for the job?) and design (how should the data be organized?). These considerations established a foundational blueprint for our work.

Technology considerations

Our priority was to select data warehousing tools that could coalesce many public sources into one shareable system. This system needed to share data securely while being easy to manage, so that we could keep costs down for our foundation and LEA partners, while giving them up-to-date access to millions of public data points. We also needed an orchestration tool to empower efficient development of analytics atop those data points. And as with any project at EA, we preferred tools that align with our open-source stance.

Design considerations

Beyond the tooling, we needed to plan for the design of the data warehouse itself. We considered how to transform available source data into analytics, and how to empower efficient querying by an analyst or dashboard developer. We thought carefully about the long-term potential of the warehouse, because a strong data model must be stable over time and extensible to new scopes of work.

2. Where we are now: Building for scale

Analyst perspective

We have expanded to working with more than seven types of data from more than 30 states. In doing so, we have developed a standard process from start to finish for data processing.

When we first begin working with a new data type, we conduct a period of data discovery across a sample of states. This allows us to gain a better understanding of the data points collected by each state and the format in which they are often reported.

We then move into building a skeleton of what our processed data should look like to ensure consistency not only across the sample of states investigated above, but also to expand to any state across the county.

Example: Think of college-going rates reported by a state. A state could choose to report only overall enrollment counts, split the data into two-year vs. four-year, in state vs. out-of-state, or examine something different altogether—such as differences by subgroups, college persistence, etc. Our structures allow all of these to be incorporated while maintaining a consistent format.

We then develop a common set of business rules for processing the given set of data types across all applicable locations. These rules are then implemented to ensure we can work with and aggregate the data in a way that does not bias the result.

Example: If we want to aggregate high school graduation rates across a specific set of schools within a state, but we do not have information about the cohort size at each school, EA has developed a process to best estimate this data point, given the data that are available, in an attempt to avoid biasing the results.

Finally, we take a step back and hypothesize or collaborate with existing partners as to what their needs and end goals may be in utilizing these data, so that we are assessing and providing the proper caveats for our partners. This often includes quantifying the suppression that may exist within the data, noting any state-specific data processing rules, and identifying large transitions that may occur within a state (such as state assessment transitions, changes in graduation criteria, etc.).

Engineering perspective

When we first came into this work, we focused on building a blueprint. Our next step was to put that blueprint into action. Based on the technical and design considerations listed above, we made the following decisions for our build:

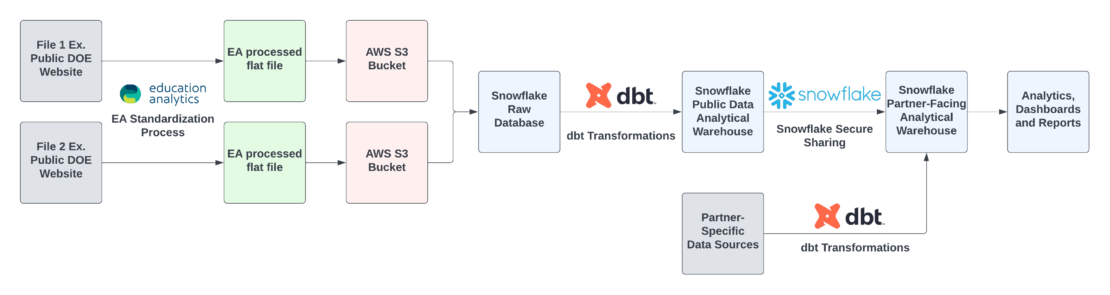

Technical decisions

We decided on a tech stack that includes AWS cloud services (S3), a Snowflake database, and dbt (Data Build Tool). We extract the source data processed by the Analyst team, store a record of each file in S3, then load the data into our Snowflake database. Once there, we utilize dbt to transform and reorganize the source data into an analytical data warehouse. We have built up infrastructure using Apache Airflow to run this extract-load-transform process on an automated schedule.

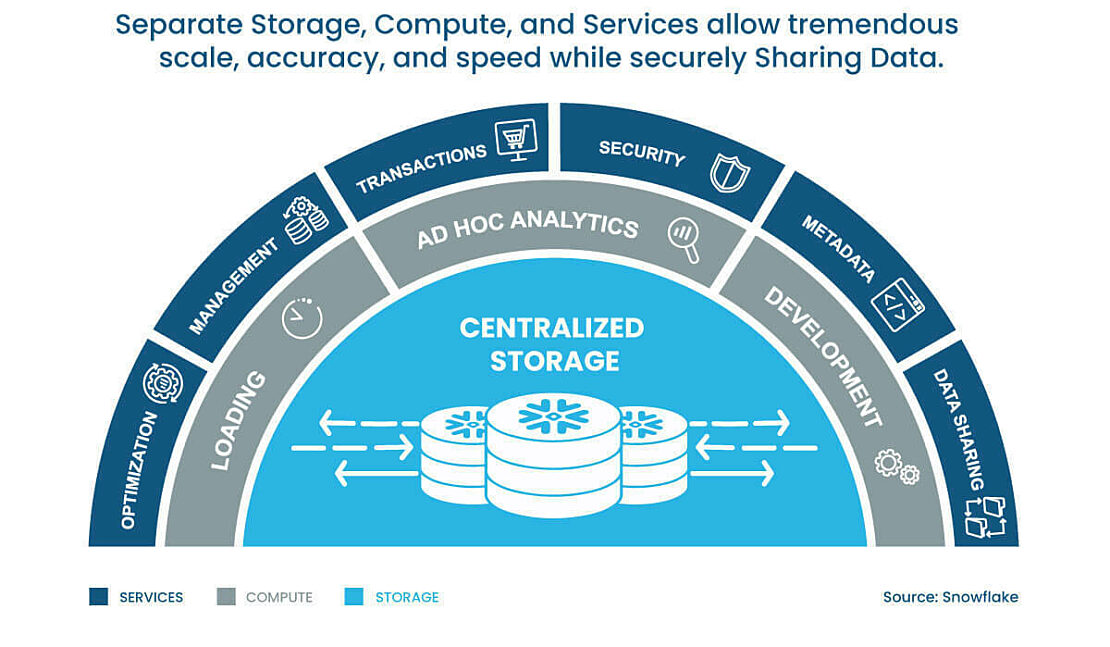

Like other big data tools, Snowflake is highly flexible and performant (meaning it quickly and efficiently performs SQL queries and tasks). Snowflake also has some unique capabilities that help facilitate our partners’ access to our public data warehouse. For example, we can enable Snowflake’s Secure Data Sharing to share pieces of the warehouse without paying for copies of the data. We can then develop custom features if a partner wants to combine the publicly available data with proprietary or unique analytics. While data security may be less of a concern with public data than student PII, maintaining best-in-class security functionality is fundamental to our organization’s mission. Snowflake’s advanced security features are one reason it is our database tool of choice.

We also have a love for dbt on the Data Engineering team at EA. Our colleague Jordan Mader wrote about it here (see the section titled, “dbt is great”) in a post about our Rally Analytics Platform. dbt has enabled easy and efficient development of our public data warehouse and the actionable analytics we continue to build atop it. It is also an open-source tool, which we are staunchly committed to at EA, because open-source communities democratize analytical development, and keep our partners from being locked into proprietary technical systems.

Design decisions

Once we decided on a tech stack, we next made some tough decisions regarding the organization of the warehouse. As Dana mentioned above, applying stringent business rules to a multitude of states is no easy goal. One challenge we often meet as engineers relates to a seemingly simple question: “What is a school?” You may say it is a physical building, or a state-designated entity, but those definitions can differ, especially when you look across geography and time. We struggled with different states’ definitions of school entity given the availability of many different school identifiers in the source data, but eventually we were able to align with federal NCES standards.

We now have a data warehouse built using accessible, efficient, and secure tools. It uses a national standard of school identity, which means we not only speak a common language across our partners, but also can build upon existing federal data. The data are organized in a dimensional warehouse model, which also makes analytics scalable.

3. Where we are headed: More data, more states

Education Analytics views this public data warehouse build as a fantastic opportunity to serve our mission, which aims to put useful analytics in the hands of those who can benefit—regardless of their resources—by significantly reducing the barrier to entry in terms of financial costs and obstacles inherent in the nature of public data itself. And as an organization that greatly benefits from open-source communities, we want to make this work public and open-source in hopes that our systems, code, successes, and failures are useful to others working in this space.

We hope to continue to expand our geographic reach while creating even more rigorous and meaningful metrics that will help decision makers understand their schools. We also plan to further develop useful visuals that allow these data to be digestible to audiences from diverse backgrounds.

Additionally, EA hopes to expand our data collection and integration beyond just state departments of education to include other resources such as Census data, National Student Clearinghouse data, and more.